Abstract

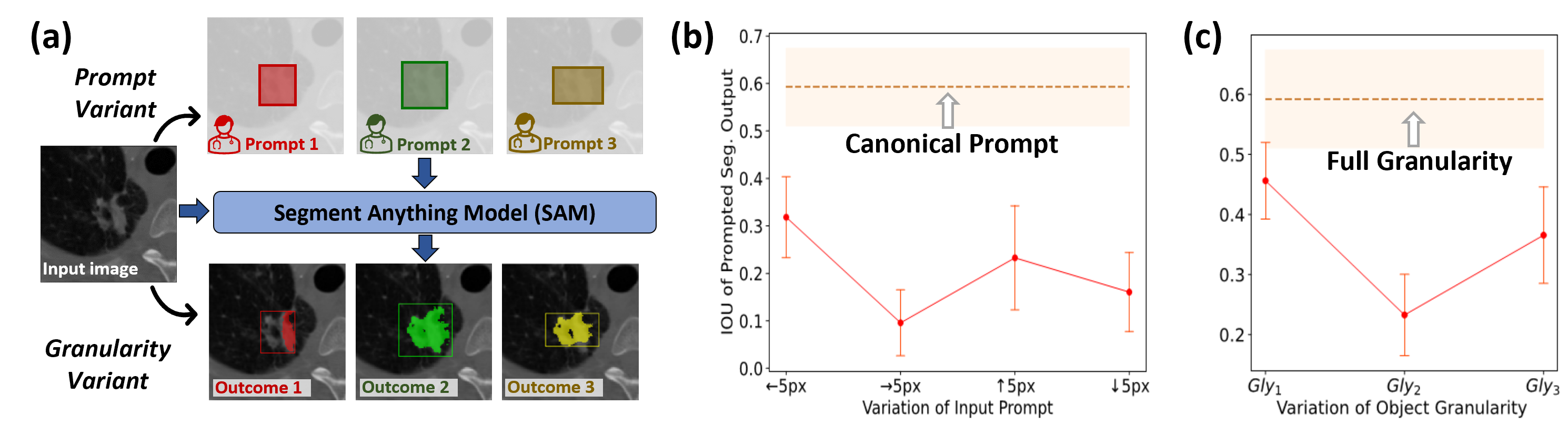

As vision foundation models such as the Segment Anything Model (SAM) demonstrate potent universality, they also present challenges in the form of ambiguous and uncertain predictions. Subtle changes in the prompt alone can cause significant variations in the model's output and granularity, contradicting the consensus requirement that a model be robust.

While several established works have been dedicated to stabilizing and strengthening SAM's predictions, this paper takes a different path: it explores how this flaw can be turned into an advantage when modeling inherently ambiguous data distributions.

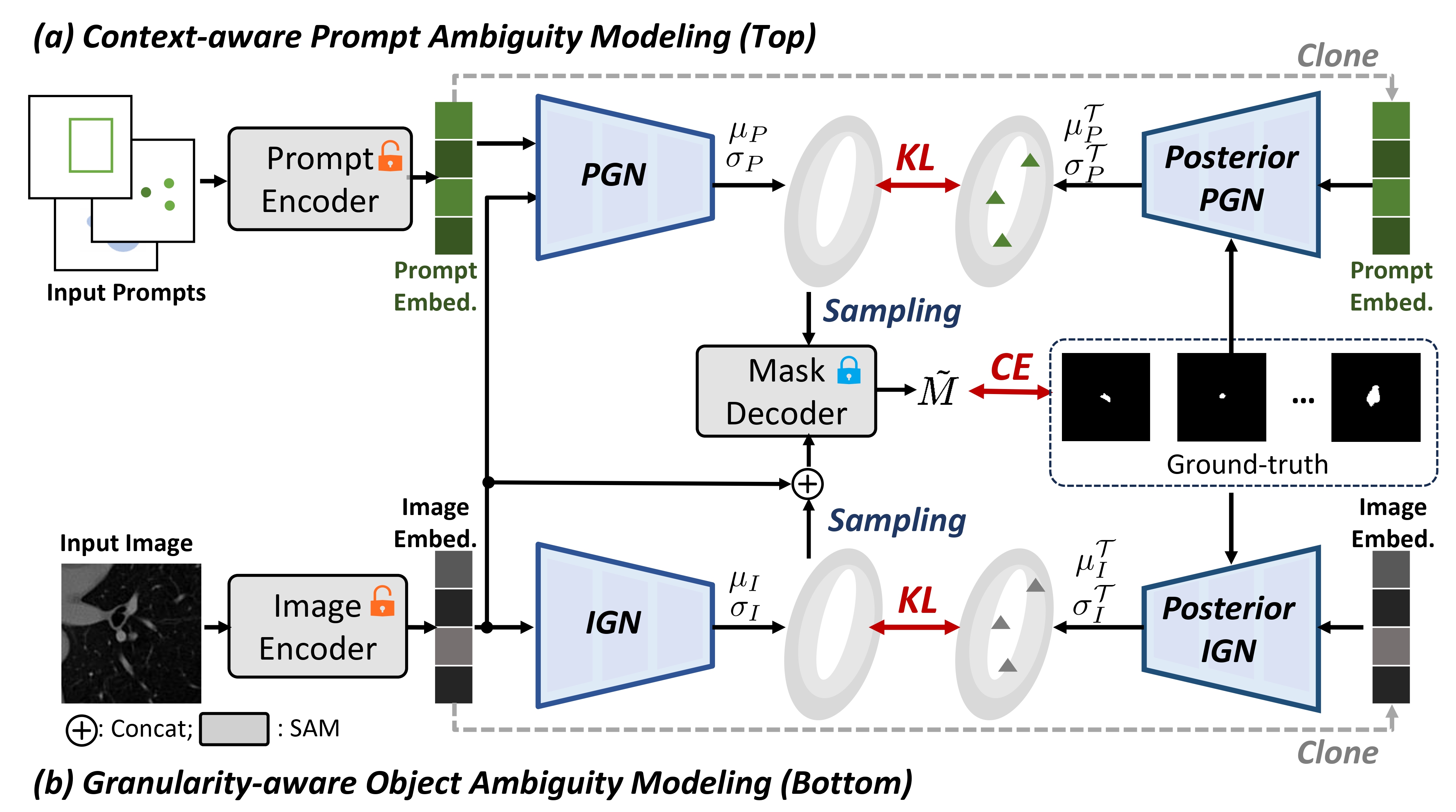

We introduce an optimization framework based on a conditional variational autoencoder (CVAE) that jointly models the prompt and the object granularity with a latent probability distribution. This approach enables the model to adaptively perceive and represent the real ambiguous label distribution, taming SAM to controllably produce a series of diverse, convincing, and reasonable segmentation outputs. Extensive experiments on several practical deployment scenarios involving ambiguity demonstrate the exceptional performance of our framework.
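For readers less familiar with conditional variational autoencoders, the sketch below shows the generic shape of a CVAE training objective: a reconstruction term on a sampled mask plus a β-weighted KL divergence that pulls a posterior Gaussian (which sees the ground-truth annotation) toward a prior Gaussian (which sees only the input). This is a minimal illustration assuming diagonal Gaussians and per-pixel binary cross-entropy, written in plain Python; it is not the 𝒜-SAM implementation, and the function names (`reparameterize`, `kl_diag_gaussians`, `bce`, `cvae_loss`) and the `beta` weight are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

Gaussian = Tuple[Sequence[float], Sequence[float]]  # (mean, log-variance)


def reparameterize(mu: Sequence[float], log_var: Sequence[float],
                   rng: random.Random) -> List[float]:
    """Draw z = mu + sigma * eps with eps ~ N(0, I), so z stays differentiable in mu and sigma."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_diag_gaussians(mu_q: Sequence[float], log_var_q: Sequence[float],
                      mu_p: Sequence[float], log_var_p: Sequence[float]) -> float:
    """KL(q || p) between two diagonal Gaussians, summed over latent dimensions."""
    kl = 0.0
    for mq, lq, mp, lp in zip(mu_q, log_var_q, mu_p, log_var_p):
        kl += 0.5 * (lp - lq + (math.exp(lq) + (mq - mp) ** 2) / math.exp(lp) - 1.0)
    return kl


def bce(pred: Sequence[float], target: Sequence[float], eps: float = 1e-7) -> float:
    """Mean per-pixel binary cross-entropy between predicted probabilities and a binary mask."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))
    return total / len(pred)


def cvae_loss(pred_mask: Sequence[float], target_mask: Sequence[float],
              posterior: Gaussian, prior: Gaussian, beta: float = 1.0) -> float:
    """Reconstruction of one annotator's mask plus beta * KL(posterior || prior)."""
    return bce(pred_mask, target_mask) + beta * kl_diag_gaussians(*posterior, *prior)
```

At inference time only the prior is available, so repeatedly sampling latents from it (rather than from the posterior) is what yields a set of distinct but plausible masks.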

Framework

Overview of 𝒜-SAM: we probabilistically model prompt-level and object-level ambiguity by jointly probabilizing the SAM embeddings with PGN and IGN, respectively.
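To make the idea of "probabilizing" embeddings concrete, the toy sketch below draws a prompt-level latent and an object-level latent from Gaussian distributions and injects each into the corresponding embedding, so every draw produces a different (prompt embedding, image embedding) pair. The additive injection `e' = e + W z`, the fixed projection matrices, and every function name here are hypothetical assumptions chosen for illustration; the page does not specify how PGN and IGN combine latents with SAM's embeddings, and no SAM code is called.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = Sequence[Sequence[float]]  # rows = embedding dim, cols = latent dim


def sample_gaussian(mu: Sequence[float], log_var: Sequence[float],
                    rng: random.Random) -> Vector:
    """Sample z ~ N(mu, diag(exp(log_var))) via the reparameterization trick."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def project(z: Sequence[float], weight: Matrix) -> Vector:
    """Linear map from latent space to embedding space: W z."""
    return [sum(w * zi for w, zi in zip(row, z)) for row in weight]


def perturb(embedding: Sequence[float], z: Sequence[float], weight: Matrix) -> Vector:
    """Hypothetical additive injection of a latent sample: e' = e + W z."""
    return [e + d for e, d in zip(embedding, project(z, weight))]


def sample_ambiguous_embeddings(
    prompt_emb: Sequence[float], image_emb: Sequence[float],
    prompt_prior: Tuple[Sequence[float], Sequence[float]],
    object_prior: Tuple[Sequence[float], Sequence[float]],
    w_prompt: Matrix, w_object: Matrix,
    num_samples: int, seed: int = 0,
) -> List[Tuple[Vector, Vector]]:
    """Return num_samples perturbed (prompt, image) embedding pairs."""
    rng = random.Random(seed)
    samples = []
    for _ in range(num_samples):
        z_prompt = sample_gaussian(*prompt_prior, rng)  # prompt-level ambiguity (PGN's role)
        z_object = sample_gaussian(*object_prior, rng)  # object-level ambiguity (IGN's role)
        samples.append((perturb(prompt_emb, z_prompt, w_prompt),
                        perturb(image_emb, z_object, w_object)))
    return samples
```

In a full system, each returned pair would be fed to SAM's mask decoder (not shown) to produce one candidate mask, so drawing K samples yields K candidate segmentations from a single prompt.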

Experiments

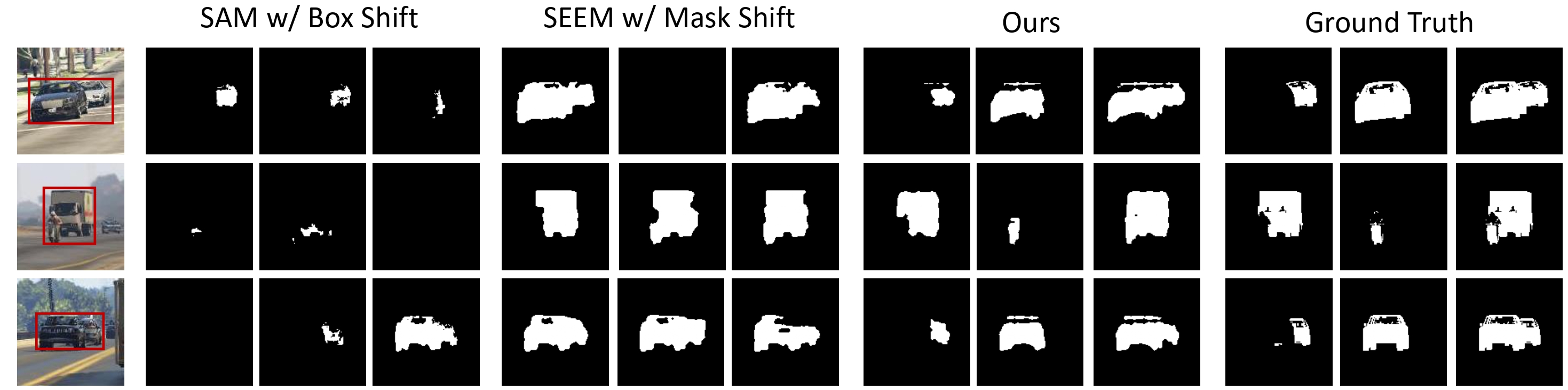

Comparison to Prompted Segmentation Models

Comparison to Conventional Ambiguous Segmentation Models

BibTeX

@inproceedings{liflaws,
title={Flaws can be Applause: Unleashing Potential of Segmenting Ambiguous Objects in SAM},
author={Li, Chenxin and Li, Wuyang and Liu, Hengyu and Liu, Xinyu and Xu, Qing and Chen, Zhen and Huang, Yue and Yuan, Yixuan and others},
booktitle={The Thirty-eighth Annual Conference on Neural Information Processing Systems}
}